The AWS outage isn't your fault. The blast radius is!

The October 2025 AWS outage took down thousands of services. Learn how to architect resilient distributed systems with circuit breakers, bulkheads, and fault isolation to minimize blast radius when cloud providers fail.

On October 20, 2025, AWS services in us-east-1 experienced significant disruptions. Within hours, thousands of applications went dark. Status pages turned red. Engineers woke up to alarms they didn't set. Here's the thing: you couldn't have prevented AWS from going down, but you could have prevented your entire application from going down with it.

The outage itself wasn't your fault. But the blast radius? That's on your architecture.

What Actually Happened

When AWS infrastructure fails, the impact spreads like wildfire through tightly coupled systems. A DynamoDB slowdown cascades into API timeouts. API timeouts trigger retries. Retries exhaust connection pools. Suddenly, an isolated infrastructure problem has become a complete system failure.

If your entire infrastructure lives in us-east-1, circuit breakers won't save you from a regional outage; you need multi-region architecture for that. But most teams aren't facing total regional collapse. Instead, they're dealing with:

- Partial service degradation: Some AWS services fail while others remain operational

- Third-party dependencies: External APIs, payment processors, analytics services

- Internal microservices: Authentication services, recommendation engines, reporting systems

- Non-critical features: Chat widgets, in-app notifications, A/B testing platforms

This is where smart fault isolation transforms a potential disaster into a manageable incident.

Understanding Blast Radius

Think of blast radius as the scope of collateral damage from a single failure. In distributed systems, failures are inevitable. The question isn't whether something will break; it's how much breaks when it does.

Consider a typical e-commerce platform with these dependencies:

┌─────────────────────────────────────────┐
│ E-commerce Platform                     │
├─────────────────────────────────────────┤
│ • Product Catalog (DynamoDB)            │
│ • Payment Processing (Stripe)           │
│ • Recommendations (Internal Service)    │
│ • Analytics (Third-party)               │
│ • Email Notifications (SendGrid)        │
│ • Search (Elasticsearch)                │
└─────────────────────────────────────────┘

Without fault isolation, when the recommendations service goes down:

- API calls hang for 30+ seconds waiting for timeouts

- Thread pools fill with blocked requests

- New customer requests get queued

- Eventually, the entire platform becomes unresponsive

- Customers can't even browse products, let alone buy

With fault isolation, when the recommendations service fails:

- Circuit breaker detects failures within seconds

- Further calls to recommendations fail fast (< 1ms)

- Customers browse products without recommendations

- Checkout and payments continue normally

- Impact: One missing feature instead of complete outage

The blast radius shrinks from "entire platform down" to "recommendations temporarily unavailable."

The Circuit Breaker Pattern: Your First Line of Defense

Circuit breakers work exactly like electrical circuit breakers in your home. When something goes wrong, they trip to prevent cascading damage. In software, they monitor calls to remote services and stop making requests when failures exceed a threshold.

The Three States

A circuit breaker operates in three states:

1. Closed (Normal Operation)

All requests pass through to the dependency. The breaker monitors failure rates.

2. Open (Fast-Fail Mode)

When failures exceed your threshold (e.g., 50% error rate over 10 seconds), the breaker trips. All subsequent requests fail immediately without attempting the call.

This is crucial during outages. Instead of each request waiting 30 seconds for a timeout, you fail in microseconds. Your threads stay free. Your users get fast error responses.

3. Half-Open (Testing Recovery)

After a timeout period (e.g., 30 seconds), the breaker allows a few test requests through. If they succeed, the breaker closes and normal operation resumes. If they fail, the breaker opens again.
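The three states above can be sketched as a small in-process state machine. This is only an illustration under stated assumptions, not the OpenFuse SDK or any particular library: the class name, the option names (failureThreshold, windowMs, minCalls, openMs), and the injectable clock are all hypothetical. For brevity, every call that arrives while the breaker is half-open is treated as a probe, and the first result decides the next state.

```javascript
// Minimal in-process circuit breaker (illustrative sketch, hypothetical names).
class CircuitBreaker {
  constructor({
    failureThreshold = 0.5, // trip at >= 50% failures...
    windowMs = 10000, // ...measured over the last 10 seconds...
    minCalls = 5, // ...once at least this many calls were observed
    openMs = 30000, // stay open this long before probing
    now = () => Date.now(), // injectable clock, handy for tests
  } = {}) {
    Object.assign(this, { failureThreshold, windowMs, minCalls, openMs, now });
    this.state = "closed";
    this.openedAt = 0;
    this.results = []; // recent outcomes: { at, ok }
  }

  async call(fn, fallback) {
    if (this.state === "open") {
      // Fail fast while the cool-down period is still running.
      if (this.now() - this.openedAt < this.openMs) return fallback();
      this.state = "half-open"; // cool-down elapsed: let a probe through
    }
    try {
      const value = await fn();
      this.record(true);
      return value;
    } catch (err) {
      this.record(false);
      return fallback(err);
    }
  }

  record(ok) {
    const t = this.now();
    if (this.state === "open") return; // late result from before the trip
    if (this.state === "half-open") {
      // The probe decides: success closes the circuit, failure re-opens it.
      this.state = ok ? "closed" : "open";
      this.openedAt = ok ? 0 : t;
      this.results = [];
      return;
    }
    // Closed: keep a rolling window of outcomes and check the failure rate.
    this.results.push({ at: t, ok });
    this.results = this.results.filter((r) => t - r.at <= this.windowMs);
    const failures = this.results.filter((r) => !r.ok).length;
    if (
      this.results.length >= this.minCalls &&
      failures / this.results.length >= this.failureThreshold
    ) {
      this.state = "open";
      this.openedAt = t;
    }
  }
}
```

A caller would create one breaker per dependency and route calls through it, passing the real call and a fallback as two functions. The minCalls guard is a design choice: without it, a single failed request on a quiet service would count as a 100% failure rate and trip the breaker.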

Real-World Example

Let's implement a circuit breaker for that recommendations service:

import { OpenFuse } from "@openfuse/sdk"

const openfuse = new OpenFuse({
  apiKey: process.env.OPENFUSE_API_KEY,
})

// In your API handler
app.get("/api/products/:id", async (req, res) => {
  const product = await getProduct(req.params.id)

  // Protected call with automatic circuit breaking
  const recommendations = await openfuse.withBreaker(
    "recommendations-api",
    async () => {
      return await fetchRecommendations(req.user.id)
    },
    {
      fallback: () => getPopularProducts(), // Return popular items when recommendations fail
      timeout: 3000, // Fail if call takes > 3s
    },
  )

  res.json({
    product,
    recommendations, // Either real or fallback
  })
})

During the AWS outage:

- The first few recommendation calls time out (3s each)

- Circuit breaker detects 50% failure rate and trips to open state

- Subsequent calls fail in < 1ms and return popular products

- After 30s, breaker tests if service recovered

- Your product pages stay fast and functional throughout
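To make the timeout and fallback behavior in this sequence concrete, here is a rough sketch of what those two options could amount to. It is not the SDK's implementation; the helper names withTimeout and callWithFallback are made up for illustration.

```javascript
// Race a promise-returning call against a timer (illustrative helper).
function withTimeout(fn, ms) {
  return new Promise((resolve, reject) => {
    const timer = setTimeout(
      () => reject(new Error(`timed out after ${ms}ms`)),
      ms,
    );
    Promise.resolve()
      .then(fn)
      .then(resolve, reject)
      .finally(() => clearTimeout(timer)); // don't leave the timer pending
  });
}

// On timeout or error, answer with the fallback instead of failing the request.
async function callWithFallback(fn, { timeout, fallback }) {
  try {
    return await withTimeout(fn, timeout);
  } catch (err) {
    return fallback(err);
  }
}
```

One caveat with this approach: the timer only stops the caller from waiting. The underlying request keeps running unless it is cancelled separately, for example by passing an AbortSignal to the HTTP client.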

Beyond Circuit Breakers: The Bulkhead Pattern

Circuit breakers prevent cascading failures. Bulkheads contain them.

Named after the watertight compartments in ships, the bulkhead pattern isolates resources so failure in one area doesn't sink the entire ship. In Node.js, this means limiting concurrent operations per dependency so that one slow dependency can't monopolize the memory, sockets, and connections the rest of your process needs.

The Problem

When analytics becomes slow (5s per call), 100 requests/second means roughly 500 analytics calls in flight at once (100 × 5). Each holds memory and connections. Your event loop becomes congested. Product requests time out. Your entire API dies even though your core services work fine.
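The concurrency estimate above follows from Little's law: average operations in flight equal the arrival rate multiplied by how long each operation takes. A tiny calculation shows how sharply the number moves with latency. The 50ms "healthy" latency is an assumed figure for comparison, not a measurement from the article.

```javascript
// Little's law: in-flight operations = arrival rate × time each one takes.
function inFlight(requestsPerSecond, latencyMs) {
  return (requestsPerSecond * latencyMs) / 1000;
}

const healthy = inFlight(100, 50); // assumed healthy latency: 5 calls in flight
const degraded = inFlight(100, 5000); // degraded latency from the text: 500 calls in flight
```

The traffic is identical in both cases; only the latency changed, and the resources held went up by two orders of magnitude.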

The Solution

import pLimit from "p-limit"

// Create separate concurrency limits (bulkheads)
const analyticsLimit = pLimit(5) // Max 5 concurrent analytics calls
const loggingLimit = pLimit(10) // Max 10 concurrent logging calls
const mlLimit = pLimit(3) // Max 3 concurrent ML calls

app.get("/api/products/:id", async (req, res) => {
  const product = await getProduct(req.params.id)

  // Fire-and-forget with concurrency limits
  analyticsLimit(() => trackPageView(req)).catch(logError)
  loggingLimit(() => logEvent("view", req)).catch(logError)
  mlLimit(() => updateRecommendations(req.user)).catch(logError)

  res.json({ product })
})

Now only 5 analytics calls run concurrently. Additional requests queue in memory. Other services have their own limits and stay unaffected. Product requests return in under 100ms. Keep in mind that p-limit's queue is unbounded: during a long slowdown the backlog keeps growing unless you shed load, for example by checking the limiter's pendingCount before enqueuing more work.

Impact: Analytics tracking is delayed/dropped, but customers browse normally.
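For work that should be dropped rather than delayed, a bulkhead can also cap its queue. The following dependency-free sketch shows one way to do that; createBulkhead, maxConcurrent, and maxQueued are hypothetical names, and this is not how p-limit itself behaves.

```javascript
// Bulkhead with a bounded queue: excess work is rejected, not buffered.
function createBulkhead({ maxConcurrent, maxQueued }) {
  let active = 0;
  const queue = [];

  const runNext = () => {
    if (active >= maxConcurrent || queue.length === 0) return;
    const { task, resolve, reject } = queue.shift();
    active++;
    Promise.resolve()
      .then(task)
      .then(resolve, reject)
      .finally(() => {
        active--;
        runNext(); // a slot freed up: start the next queued task
      });
  };

  return function run(task) {
    // Shed load instead of letting the backlog grow without bound.
    if (active >= maxConcurrent && queue.length >= maxQueued) {
      return Promise.reject(new Error("bulkhead full: task dropped"));
    }
    return new Promise((resolve, reject) => {
      queue.push({ task, resolve, reject });
      runNext();
    });
  };
}
```

With something like createBulkhead({ maxConcurrent: 5, maxQueued: 100 }) around analytics calls, a slow provider costs at most 105 pending operations, and anything beyond that fails immediately into the caller's catch handler.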

Choosing Limits

- Non-critical (analytics, logging): 5-10 concurrent requests

- User-facing (recommendations, reviews): 10-20 concurrent requests

- Critical (payments, auth): Higher limits or no limits; rely on circuit breakers instead

The Challenge of Distributed Circuit Breaking

So far, we've discussed circuit breakers within a single application. But modern systems have a problem: distributed state.

Imagine you have 20 instances of your API service. When a dependency fails:

- Each instance maintains its own circuit breaker state

- Each instance independently discovers the failure (20× the failed requests)

- Each instance separately opens its circuit breaker

- Each instance independently tests recovery

This works, but it's inefficient. During those first critical seconds of an outage, you're sending 20× more failure traffic than necessary. When testing recovery, you're creating 20× more test traffic than needed.

Even worse: circuit breaker state is ephemeral. Every time you:

- Deploy new code

- Auto-scale and spawn new instances

- Restart a crashed service

- Perform a rolling update

Your circuit breakers reset to closed state. That new instance doesn't know the recommendation service has been failing for the last 10 minutes. It has to discover the failure independently, sending more doomed requests to an already-struggling dependency. During an active incident, deployments and auto-scaling events force your system to re-learn failures it already discovered.

And there's no control during incidents. With in-process circuit breakers:

- No manual intervention: You can't manually open a circuit when you know a dependency is down. You have to wait for failures to accumulate.

- No emergency override: A vendor tells you their API will be degraded for 2 hours? You can't proactively trip the breaker. Your system has to learn through failures.

- Policy changes require deploys: Need to adjust timeout from 3s to 5s during an incident? That's a code change, build, and deployment. During an outage. Good luck.

- No visibility: Which circuits are open right now? You'd need to dig into logs or metrics.

Enter Centralized Circuit Breaking

This is where a centralized circuit breaker platform becomes valuable. Instead of each instance learning about failures independently, they share state:

Traditional (Distributed):
────────────────────────────
Instance 1  → [Circuit Breaker 1]  → Service X (failing)
Instance 2  → [Circuit Breaker 2]  → Service X (failing)  ← Learning independently
Instance 3  → [Circuit Breaker 3]  → Service X (failing)
...
Instance 20 → [Circuit Breaker 20] → Service X (failing)

Centralized:
────────────────────────────
Instance 1  ┐
Instance 2  ├→ [OpenFuse] → Service X (failing)
Instance 3  │       ↓
...         │  [Shared Circuit State]
Instance 20 ┘
                    ↓
All instances instantly know: "Service X is down"

Benefits:

- Faster detection: One instance discovers the failure, all benefit

- Reduced load: No redundant failure testing across instances

- Coordinated recovery: Single half-open test instead of N simultaneous tests

- Global visibility: See circuit state across your entire infrastructure

- Policy management: Update thresholds without redeploying code
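The idea of shared state can be sketched with a small store that every instance consults before calling a dependency. This is only a conceptual illustration and says nothing about how OpenFuse works internally. The in-memory Map stands in for whatever shared backend a real system would use, and all names (SharedCircuitStore, createInstance, failuresToOpen) are hypothetical. Recovery probing is left out to keep the example short.

```javascript
// In-memory stand-in for a shared store. A real deployment would need a
// networked backend; which one is an assumption left open here.
class SharedCircuitStore {
  constructor() {
    this.circuits = new Map(); // name -> { state, openedAt, reason }
  }
  async get(name) {
    return this.circuits.get(name) ?? { state: "closed" };
  }
  async open(name, reason, now = Date.now()) {
    this.circuits.set(name, { state: "open", openedAt: now, reason });
  }
  async close(name) {
    this.circuits.set(name, { state: "closed" });
  }
}

// Each application instance guards its calls with the same store.
function createInstance(store, { failuresToOpen = 3 } = {}) {
  const failures = new Map(); // local consecutive-failure counts per circuit
  return async function guarded(name, fn, fallback) {
    const circuit = await store.get(name);
    if (circuit.state === "open") return fallback(); // learned from a peer
    try {
      const value = await fn();
      failures.set(name, 0);
      return value;
    } catch (err) {
      const count = (failures.get(name) ?? 0) + 1;
      failures.set(name, count);
      if (count >= failuresToOpen) await store.open(name, "automatic");
      return fallback(err);
    }
  };
}
```

Because the state lives outside any one process, a freshly started instance sees an open circuit on its first call, and an operator can trip a breaker ahead of a known vendor degradation by calling store.open with a "manual" reason. A lookup on every call adds latency, so a practical design would cache the state locally or have it pushed to instances.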

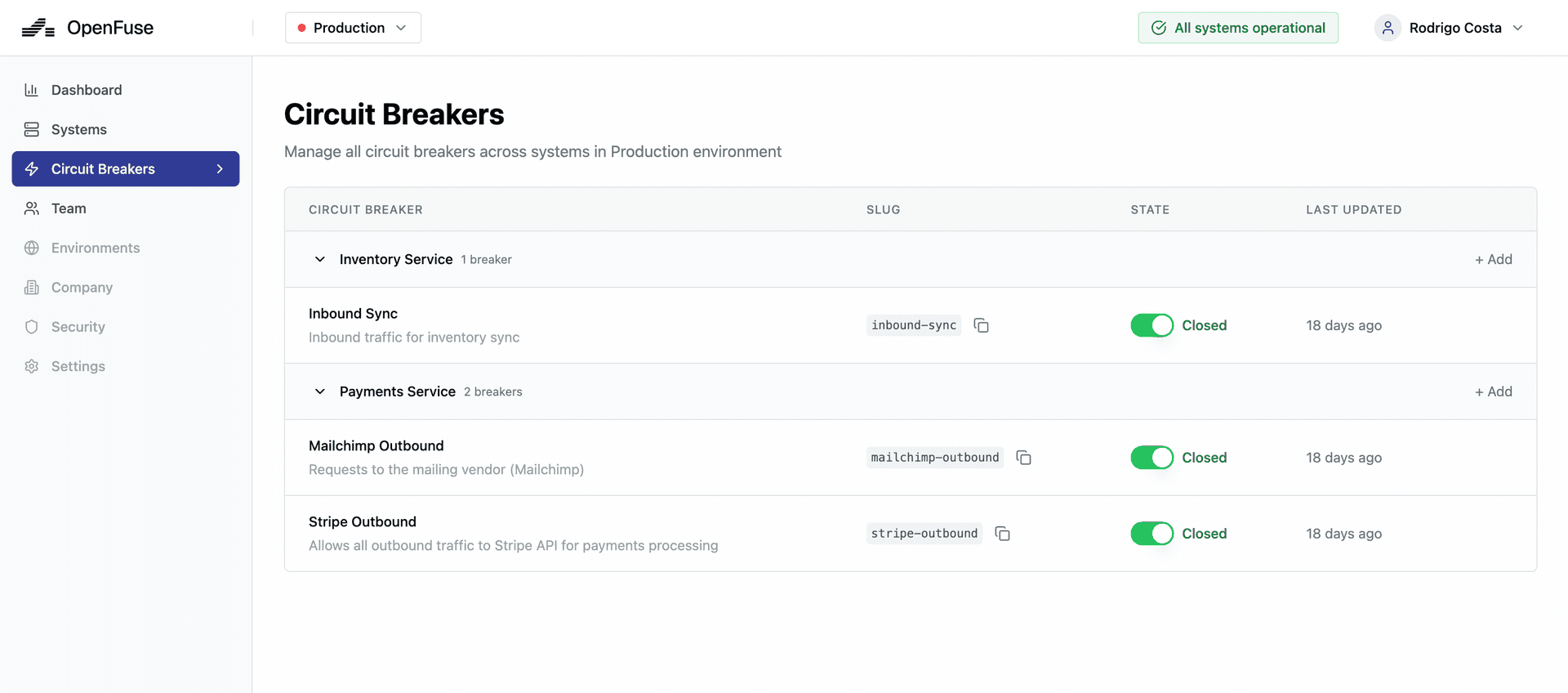

Meet OpenFuse: Centralized Circuit Breaking for Modern Teams

OpenFuse provides a centralized circuit breaker service that your applications can integrate in minutes. Instead of managing circuit breaker libraries, configurations, and state across dozens of services, you get a single control plane for all fault isolation.

How It Works

import { OpenFuse } from "@openfuse/sdk"

const openfuse = new OpenFuse()

// Protected call with centralized circuit breaking
const data = await openfuse.withBreaker(
  "recommendations-service",
  async () => {
    return await fetchRecommendations(userId)
  },
  {
    fallback: () => getPopularProducts(),
    timeout: 3000,
  },
)

That's it. OpenFuse handles:

- ✓ Monitoring failure rates across all your instances

- ✓ Opening/closing circuits based on real-time health (with manual override)

- ✓ Coordinating recovery testing

- ✓ Live dashboards showing circuit status across all services

- ✓ Alerting when circuits open

- ✓ Adjusting policies (timeouts, thresholds) without code deploys

- ✓ Persistent state across deploys and auto-scaling events

- ✓ Exporting metrics to your observability stack

During an Outage

When AWS services degrade, here's what happens with OpenFuse:

- Instant Detection: Your first instance sees failures, reports to OpenFuse

- Coordinated Response: OpenFuse opens the circuit globally within milliseconds

- All Instances Protected: Every service instance immediately starts failing fast

- Smart Recovery: OpenFuse periodically tests the dependency from a single point

- Automatic Restoration: When health returns, circuits close automatically

Real-World Impact

Teams using centralized circuit breaking report:

- Reduction in incident duration: Failures contained to specific features instead of cascading

- Higher availability: Better overall uptime despite dependency failures

- Faster incident response: Engineers see which circuits are open before customers complain

- Less manual intervention: Most incidents self-heal as dependencies recover

Getting Started

OpenFuse offers a generous free tier perfect for startups and small teams:

- Up to 100,000 circuit breaker evaluations/month

- Unlimited services and circuit breakers

- Real-time dashboard and metrics

- Slack/PagerDuty integrations

For larger teams, paid plans include advanced features like:

- Custom fallback strategies per environment

- A/B testing fallback approaches

- Automatic anomaly detection

- 99.99% SLA on circuit evaluation

Practical Resilience: A Checklist for Your Architecture

Here's how to audit your system and reduce blast radius:

1. Identify Your Dependencies

Map every external call your system makes:

- Cloud services (databases, queues, storage)

- Third-party APIs (payments, auth, analytics)

- Internal microservices

- Feature flags, A/B testing platforms

2. Classify by Criticality

For each dependency, ask: "Can we still serve customers if this fails?"

Critical (requires circuit breaker + fallback):

- Payment processing

- Product catalog

- Authentication

- Shopping cart

Non-critical (requires circuit breaker + graceful degradation):

- Recommendations

- Reviews

- Analytics

- Social sharing

Async (use message queues, retry later):

- Email notifications

- Report generation

- Data exports
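One lightweight way to keep this classification honest is to record it as data next to the code. The inventory below is a hypothetical example: the dependency names and timeouts echo the examples in this article, while the field names and onFailure labels are invented for illustration.

```javascript
// Hypothetical dependency inventory: tier, timeout, and failure behavior.
const dependencies = {
  "payment-api": { tier: "critical", timeoutMs: 5000, onFailure: "queue-for-retry" },
  "reviews-api": { tier: "non-critical", timeoutMs: 2000, onFailure: "empty-result" },
  "recommendations-api": { tier: "non-critical", timeoutMs: 3000, onFailure: "popular-products" },
  "email-notifications": { tier: "async", timeoutMs: null, onFailure: "retry-from-queue" },
};

// Flag anything the system calls that nobody has classified yet.
function unclassified(calledDependencies) {
  return calledDependencies.filter(
    (name) => !Object.prototype.hasOwnProperty.call(dependencies, name),
  );
}
```

Feeding unclassified the list of hosts or service names seen in outbound traffic gives a quick audit of dependencies that have no agreed failure behavior.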

3. Implement Circuit Breakers

Start with your non-critical dependencies, since they're safer to experiment with:

import { OpenFuse } from "@openfuse/sdk"

const openfuse = new OpenFuse()

// Non-critical: Reviews
const reviews = await openfuse.withBreaker(
  "reviews-api",
  async () => {
    return await fetchReviews(productId)
  },
  {
    fallback: () => [], // Empty array if unavailable
    timeout: 2000,
  },
)

// Critical: Payments
const payment = await openfuse.withBreaker(
  "payment-api",
  async () => {
    return await processPayment(orderId)
  },
  {
    fallback: () => queuePayment(orderId), // Queue for later processing
    timeout: 5000,
  },
)

4. Add Bulkheads

Limit concurrent operations for different service tiers using p-limit:

import pLimit from "p-limit"

const bulkheads = {
  critical: pLimit(50), // Core features: payments, auth, catalog
  standard: pLimit(20), // User-facing: recommendations, reviews
  background: pLimit(5), // Fire-and-forget: analytics, logging
}

5. Test Your Failure Modes with Chaos Engineering

You've implemented circuit breakers and bulkheads. But do they actually work? The only way to know is to deliberately break things in a controlled environment.

This is chaos engineering: intentionally injecting failures to verify your resilience patterns work before production outages test them for you. Netflix pioneered this approach with their famous Chaos Monkey tool, which randomly terminates production instances to ensure systems can handle failures.

What to test:

- Latency injection: Slow down a dependency from 100ms to 5 seconds. Do your timeouts fire? Do circuit breakers open? Does the user experience degrade gracefully or break completely?

- Service failures: Make a dependency return 100% errors. Do circuit breakers trip quickly? Do fallbacks activate? Can users still complete their primary tasks?

- Partial failures: Simulate 50% error rates or intermittent slowdowns. This is often harder to handle than complete failures: does your system thrash or stabilize?

- Resource exhaustion: Max out your bulkhead limits. Do requests queue appropriately? Do other services remain unaffected?
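For development and staging, the latency and failure scenarios above can be approximated with a thin wrapper around a dependency call. This is a minimal sketch rather than a replacement for a chaos engineering platform. The name withChaos and its options are hypothetical, and the random source is injectable so tests stay deterministic.

```javascript
// Wrap a dependency call so experiments can inject latency and errors.
function withChaos(fn, { latencyMs = 0, errorRate = 0, random = Math.random } = {}) {
  return async (...args) => {
    if (latencyMs > 0) {
      // Latency injection: delay before the real call is made.
      await new Promise((resolve) => setTimeout(resolve, latencyMs));
    }
    if (random() < errorRate) {
      // Failure injection: errorRate 1 fails every call, 0.5 roughly half.
      throw new Error("chaos: injected failure");
    }
    return fn(...args);
  };
}
```

Wrapping a call with latencyMs set to 5000 reproduces the slow-dependency scenario, and errorRate set to 0.5 reproduces partial failure. Running the wrapped function behind your breaker and bulkhead shows whether timeouts fire and fallbacks activate as expected.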

Start small, then expand:

Begin in development, then staging, then, when you're confident, controlled production experiments. Modern chaos engineering platforms like Gremlin and Chaos Mesh make this safer with blast radius controls, automatic rollbacks, and scheduled experiments.

The goal isn't to break things for fun. It's to discover your actual failure modes in low-stakes situations, fix them, and build confidence that when AWS goes down at 3am, your systems will degrade gracefully instead of catastrophically.

Pro tip: Document what happens during each chaos experiment. These scenarios become your runbooks for real incidents. When analytics goes down in production, you'll already know the impact (delayed tracking, users unaffected) because you tested it last Tuesday.

The Next Outage is Coming

Let's be realistic: AWS will have another outage. So will your payment processor, your analytics provider, and your internal microservices. Cloud providers don't offer 100% uptime because it's impossible.

But you can control your blast radius. You can architect systems that degrade gracefully instead of collapsing catastrophically. You can choose which features fail and which keep working.

The October 20th outage wasn't your fault. But if the next one takes down your entire platform when only one dependency failed, that's a choice you made with your architecture.

Start small:

- Pick your most problematic non-critical dependency

- Wrap it in a circuit breaker with a sensible fallback

- Test the failure mode in staging

- Deploy to production

- Monitor and adjust thresholds

- Repeat for the next dependency

Each circuit breaker you add shrinks your blast radius. Each fallback you implement keeps more customers happy during incidents. Each bulkhead you create isolates failures from spreading.

The cloud will fail again. Your dependencies will fail again. Will your system?

Ready to reduce your blast radius? OpenFuse makes circuit breaking simple, centralized, and effective. Start your free trial →